MobileNet V2 Trained on ImageNet Competition Data

Identify the main object in an image

Resource retrieval

Get the pre-trained net:

In[]:=

NetModel["MobileNet V2 Trained on ImageNet Competition Data"]

Out[]=

NetModel parameters

This model consists of a family of individual nets, each identified by a specific parameter combination. Inspect the available parameters:

In[]:=

NetModel["MobileNet V2 Trained on ImageNet Competition Data","ParametersInformation"]

Out[]=

Pick a non-default net by specifying the parameters:

In[]:=

NetModel[{"MobileNet V2 Trained on ImageNet Competition Data","Depth"->1.,"Width"->224}]

Out[]=

Pick a non-default uninitialized net:

In[]:=

NetModel[{"MobileNet V2 Trained on ImageNet Competition Data","Depth"->0.75,"Width"->224},"UninitializedEvaluationNet"]

Out[]=

Basic usage

Classify an image:

In[]:=

pred=NetModel["MobileNet V2 Trained on ImageNet Competition Data"]

Out[]=

The prediction is an Entity object, which can be queried:

In[]:=

pred["Definition"]

Out[]=

male peafowl; having a crested head and very large fanlike tail marked with iridescent eyes or spots

Get a list of available properties of the predicted Entity:

In[]:=

pred["Properties"]

Out[]=

Obtain the probabilities of the ten most likely entities predicted by the net:

In[]:=

NetModel["MobileNet V2 Trained on ImageNet Competition Data"]

,{"TopProbabilities"->10}

Out[]=

An object outside the list of the ImageNet classes will be misidentified:

In[]:=

NetModel["MobileNet V2 Trained on ImageNet Competition Data"]

Out[]=

Obtain the list of names of all available classes:

In[]:=

EntityValue[NetExtract[NetModel["MobileNet V2 Trained on ImageNet Competition Data"],"Output"][["Labels"]],"Name"]

Out[]=

Feature extraction

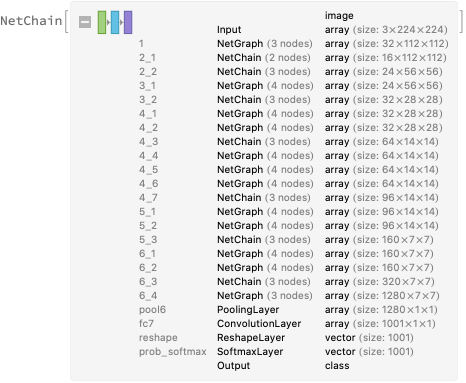

Remove the last three layers of the trained net so that the net produces a vector representation of an image:

In[]:=

extractor=NetTake[NetModel["MobileNet V2 Trained on ImageNet Competition Data"],"fc7"]

Out[]=

NetChain

Get a set of images:

Visualize the features of a set of images:
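The two steps above can be sketched as follows. This is a minimal sketch, not the original cells: it assumes the `extractor` defined earlier in this section, and it uses a few built-in `ExampleData` test images as a stand-in because the original image set is not reproduced here.

```wolfram
(* Stand-in image set: built-in test images, not the images used originally *)
imgs = ExampleData /@ Take[ExampleData["TestImage"], 6];

(* Lay the images out in 2D according to the feature vectors computed by extractor *)
FeatureSpacePlot[imgs, FeatureExtractor -> extractor]
```

Images that the net considers similar should land close together in the plot.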

Visualize convolutional weights

Extract the weights of the first convolutional layer in the trained net:

Visualize the weights as a list of 48 images of size 3x3:
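One possible way to carry out these two steps is sketched below. The name of the first convolutional layer is not given here, so the sketch assumes that the first rank-4 array returned by `Information[net, "Arrays"]` is that layer's kernel; check this against the net structure before relying on it.

```wolfram
net = NetModel["MobileNet V2 Trained on ImageNet Competition Data"];

(* All learned arrays of the net *)
arrays = Information[net, "Arrays"];

(* Assumption: the first rank-4 array is the kernel of the first convolutional layer *)
weights = Normal[First[Select[Values[arrays], Length[Dimensions[#]] == 4 &]]];

(* Each kernel has dimensions {channels, height, width}; rescale values and enlarge for display *)
ImageResize[ImageAdjust[Image[#, Interleaving -> False]], 60, Resampling -> "Nearest"] & /@ weights
```

`Interleaving -> False` tells `Image` that the channel dimension comes first, which matches the layout of convolution kernels.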

Transfer learning

Use the pre-trained model to build a classifier for telling apart images of dogs and cats. Create a test set and a training set:

Remove the linear layer from the pre-trained net:

Create a new net composed of the pre-trained net followed by a linear layer and a softmax layer:

Perfect accuracy is obtained on the test set:
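The workflow above might look like the following sketch. The function `trainCatDogNet` and its arguments `trainSet` and `testSet` are hypothetical names introduced for illustration (lists of rules of the form image -> "cat" or image -> "dog"), and the number of layers to drop is an assumption that should be checked against the actual net.

```wolfram
(* trainSet and testSet are hypothetical lists of rules: image -> "cat" | "dog" *)
trainCatDogNet[trainSet_List, testSet_List] := Module[{base, newNet, trained},
  (* Assumption: dropping the last two layers removes the classifier and the softmax *)
  base = NetDrop[NetModel["MobileNet V2 Trained on ImageNet Competition Data"], -2];
  (* Pre-trained body followed by a fresh linear layer and a softmax *)
  newNet = NetChain[
    <|"pretrainedNet" -> base, "linearNew" -> LinearLayer[], "softmax" -> SoftmaxLayer[]|>,
    "Output" -> NetDecoder[{"Class", {"cat", "dog"}}]];
  (* Train only the new linear layer; all pre-trained weights stay frozen *)
  trained = NetTrain[newNet, trainSet,
    LearningRateMultipliers -> {"linearNew" -> 1, _ -> 0}];
  <|"Net" -> trained, "Accuracy" -> NetMeasurements[trained, testSet, "Accuracy"]|>
]
```

Calling `trainCatDogNet[trainSet, testSet]["Accuracy"]` would then return the accuracy on the test set. Freezing the pre-trained layers keeps training fast and reduces overfitting on a small dataset.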

Net information

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:

Obtain the layer type counts:

Display the summary graphic:
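The four queries above can be sketched with `Information`, assuming a Wolfram Language version in which `Information` accepts net properties (older versions used `NetInformation` with the same property names):

```wolfram
net = NetModel["MobileNet V2 Trained on ImageNet Competition Data"];

Information[net, "ArraysElementCounts"]      (* number of parameters in each array *)
Information[net, "ArraysTotalElementCount"]  (* total number of parameters *)
Information[net, "LayerTypeCounts"]          (* how many layers of each type *)
Information[net, "SummaryGraphic"]           (* graphical overview of the net *)
```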

Export to MXNet

Get the size of the parameter file:
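A minimal sketch of the export and the size query, assuming the net is written to the temporary directory under the illustrative file name `net.json`. Exporting to the "MXNet" format also writes a `net.params` file with the weights next to the JSON file.

```wolfram
net = NetModel["MobileNet V2 Trained on ImageNet Competition Data"];

(* Writes net.json (architecture) and, alongside it, net.params (weights) *)
jsonPath = Export[FileNameJoin[{$TemporaryDirectory, "net.json"}], net, "MXNet"];

(* Path of the parameter file created next to the JSON file *)
paramPath = FileNameJoin[{DirectoryName[jsonPath], "net.params"}];

(* Size of the parameter file in bytes *)
FileByteCount[paramPath]
```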