YOLO V3 Trained on Open Images Data

Detect and localize objects in an image

Resource retrieval

Get the pre-trained net:

In[]:=

NetModel["YOLO V3 Trained on Open Images Data"]

Label list

Define the label list for this model. Integers in the model’s output correspond to elements in the label list:

In[]:=

labels=;

Evaluation function

Write an evaluation function to scale the result to the input image size and suppress the least probable detections:

In[]:=

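ClearAll[IoU];
(* IoU is compiled on first use and then cached (IoU := IoU = ...) *)
IoU := IoU = With[{IoUCompiled = Compile[{{box1, _Real, 2}, {box2, _Real, 2}},
      Module[{area1, area2, x1, y1, x2, y2, w, h, int},
       (* areas of the two boxes, each given as {{xmin, ymin}, {xmax, ymax}} *)
       area1 = (box1[[2, 1]] - box1[[1, 1]])*(box1[[2, 2]] - box1[[1, 2]]);
       area2 = (box2[[2, 1]] - box2[[1, 1]])*(box2[[2, 2]] - box2[[1, 2]]);
       (* corners of the intersection rectangle *)
       x1 = Max[box1[[1, 1]], box2[[1, 1]]]; y1 = Max[box1[[1, 2]], box2[[1, 2]]];
       x2 = Min[box1[[2, 1]], box2[[2, 1]]]; y2 = Min[box1[[2, 2]], box2[[2, 2]]];
       (* intersection area, zero when the boxes do not overlap *)
       w = Max[0., x2 - x1]; h = Max[0., y2 - y1]; int = w*h;
       int/(area1 + area2 - int)],
      RuntimeAttributes -> {Listable}, Parallelization -> True, RuntimeOptions -> "Speed"]},
    (* turn Rectangle[{x1, y1}, {x2, y2}] arguments into {{x1, y1}, {x2, y2}} before calling the compiled code *)
    IoUCompiled @@ Replace[{##}, Rectangle -> List, Infinity, Heads -> True] &];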
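The compiled IoU above divides the intersection area by area1 + area2 - int. As a hand check of that formula, here is an uncompiled restatement for exact inputs; the name iouPlain is hypothetical and not part of the model's code. Two 2×2 squares offset by one unit overlap in a 1×1 square, so their union is 4 + 4 - 1 = 7 and the IoU is 1/7.

```mathematica
(* Hypothetical uncompiled restatement of the IoU formula, for exact inputs *)
iouPlain[Rectangle[{ax1_, ay1_}, {ax2_, ay2_}], Rectangle[{bx1_, by1_}, {bx2_, by2_}]] :=
 Module[{w, h, inter},
  (* width and height of the intersection, clipped at zero *)
  w = Max[0, Min[ax2, bx2] - Max[ax1, bx1]];
  h = Max[0, Min[ay2, by2] - Max[ay1, by1]];
  inter = w*h;
  (* intersection over union *)
  inter/((ax2 - ax1)*(ay2 - ay1) + (bx2 - bx1)*(by2 - by1) - inter)]

iouPlain[Rectangle[{0, 0}, {2, 2}], Rectangle[{1, 1}, {3, 3}]]
(* 1/7 *)
```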

In[]:=

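(* Non-maximum suppression: a {label, probability} pair is kept only if its probability is higher
   than that of the same label in every other box overlapping this one with IoU > nmsThreshold;
   boxes left without any label are then removed *)
nonMaxSuppression[nmsThreshold_][dets_] := DeleteCases[
   Function[detection,
     {detection[[1]],
      Function[overlapBoxLabels,
        Select[detection[[2]],
         #[[2]] > Max@Extract[overlapBoxLabels[[All, All, 2]],
             Position[overlapBoxLabels[[All, All, 1]], #[[1]]]] &]][
       (* {label, probability} lists of the other boxes overlapping this one *)
       Select[dets, (IoU[detection[[1]], #[[1]]] > nmsThreshold && ! (detection[[1]] === #[[1]])) &][[All, 2]]]}] /@ dets,
   {_, {}}];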
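The suppression rule can be checked on a few hand-made detections without loading the model. The sketch below is a hypothetical, uncompiled restatement (boxIoU, nmsSketch and detsDemo are illustrative names, not part of the model's code) that follows the same per-label comparison as nonMaxSuppression. The first two boxes overlap with IoU 81/119 ≈ 0.68, above the 0.45 default.

```mathematica
(* Hypothetical IoU helper for Rectangle[{x1, y1}, {x2, y2}] boxes *)
boxIoU[Rectangle[{ax1_, ay1_}, {ax2_, ay2_}], Rectangle[{bx1_, by1_}, {bx2_, by2_}]] :=
 With[{inter = Max[0, Min[ax2, bx2] - Max[ax1, bx1]]*Max[0, Min[ay2, by2] - Max[ay1, by1]]},
  inter/((ax2 - ax1)*(ay2 - ay1) + (bx2 - bx1)*(by2 - by1) - inter)]

(* Same rule as nonMaxSuppression: a {label, prob} pair survives only if prob beats
   the same label in every other box whose IoU with this one exceeds the threshold *)
nmsSketch[threshold_][dets_] := DeleteCases[
  Map[Function[det,
    With[{rivals = Flatten[Select[dets,
          #[[1]] =!= det[[1]] && boxIoU[det[[1]], #[[1]]] > threshold &][[All, 2]], 1]},
     {det[[1]], Select[det[[2]],
       Function[lp, lp[[2]] > Max[Cases[rivals, {lp[[1]], p_} :> p]]]]}]],
   dets],
  {_, {}}]

(* Synthetic detections (illustrative values, not model output) *)
detsDemo = {
   {Rectangle[{0, 0}, {10, 10}], {{"Cat", 0.9}}},
   {Rectangle[{1, 1}, {11, 11}], {{"Cat", 0.7}, {"Dog", 0.6}}},
   {Rectangle[{50, 50}, {60, 60}], {{"Cat", 0.8}}}};

nmsSketch[0.45][detsDemo]
(* keeps Cat 0.9 in box 1, only Dog 0.6 in box 2 (its Cat is suppressed), Cat 0.8 in box 3 *)
```

Because each label is compared against every overlapping neighbour, a box can be suppressed by a neighbour that is itself suppressed; this is where the rule differs from the common greedy NMS that visits boxes in score order.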

In[]:=

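(* Score = objectness x conditional class probability; keep boxes with a score above threshold *)
netOutputDecoder[threshold_ : .5][output_] := Module[
   {probs = output["Objectness"]*output["ClassProb"], detectionBoxes},
   (* indices of boxes with at least one class score above the threshold *)
   detectionBoxes = Union@Flatten@SparseArray[UnitStep[probs - threshold]]["NonzeroPositions"][[All, 1]];
   (* for each such box: {Rectangle, {{label, score}, ...}} *)
   Map[Function[{detectionBox},
     {Rectangle @@ output["Boxes"][[detectionBox]],
      Map[{labels[[#]], probs[[detectionBox, #]]} &,
       Flatten@Position[probs[[detectionBox]], x_ /; x > threshold]]}],
    detectionBoxes]];

(* Resize and pad the input image to the network's input size *)
imageConformer[dims_, fitting_][image_] := First[ConformImages[{image}, dims, fitting, Padding -> 0.5]];

(* Map boxes from network-input coordinates back to original-image coordinates *)
deconformRectangles[{}, _, _, _] := {};
deconformRectangles[rboxes_List, image_Image, netDims_List, "Fit"] :=
  With[{netAspectRatio = netDims[[2]]/netDims[[1]]},
   With[{boxes = Map[{#[[1]], #[[2]]} &, rboxes],
     (* padding added by "Fit" along the shorter side, in original-image pixels *)
     padding = If[ImageAspectRatio[image] < netAspectRatio,
       {0, (ImageDimensions[image][[1]]*netAspectRatio - ImageDimensions[image][[2]])/2},
       {(ImageDimensions[image][[2]]*(1/netAspectRatio) - ImageDimensions[image][[1]])/2, 0}],
     (* original-image pixels per network-input pixel *)
     scale = If[ImageAspectRatio[image] < netAspectRatio,
       ImageDimensions[image][[1]]/netDims[[1]],
       ImageDimensions[image][[2]]/netDims[[2]]]},
    Map[Rectangle[Round[#[[1]]], Round[#[[2]]]] &,
     Transpose[Transpose[boxes, {2, 3, 1}]*scale - padding, {3, 1, 2}]]]];

(* Pair the rescaled boxes with their {label, score} lists *)
detectionsDeconformer[image_Image, netDims_List, fitting_String][objects_] :=
  Transpose[{deconformRectangles[objects[[All, 1]], image, netDims, fitting], objects[[All, 2]]}];

(* Keep only the requested classes (All keeps everything) *)
filterClasses[All][detections_] := detections;
filterClasses[classes_][detections_] :=
  {#[[1]], Select[#[[2]], Function[det, MemberQ[classes, det[[1]]]]]} & /@ detections;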
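netOutputDecoder multiplies each box's objectness by its conditional class probabilities and keeps the {label, score} pairs above the threshold. The sketch below restates that step on a hand-made output association (three boxes, two classes) so the data layout is visible without downloading the model; the values and the names labelsDemo, outputDemo, probsDemo and decodeDemo are hypothetical.

```mathematica
(* Synthetic stand-in for the net output: 3 boxes, 2 classes (illustrative values) *)
labelsDemo = {"Cat", "Dog"};
outputDemo = <|
   "Boxes" -> {{{0, 0}, {10, 10}}, {{5, 5}, {20, 20}}, {{30, 30}, {40, 40}}},
   "Objectness" -> {0.9, 0.2, 0.8},
   "ClassProb" -> {{0.9, 0.1}, {0.95, 0.05}, {0.3, 0.7}}|>;

(* Per-box, per-class score: each row of ClassProb times that box's objectness *)
probsDemo = outputDemo["Objectness"]*outputDemo["ClassProb"]

(* Keep {label, score} pairs above the threshold; drop boxes with none *)
decodeDemo[threshold_] := DeleteCases[
  MapThread[{Rectangle @@ #1, Select[Transpose[{labelsDemo, #2}], Last[#] > threshold &]} &,
   {outputDemo["Boxes"], probsDemo}],
  {_, {}}]

decodeDemo[0.5]
(* keeps Cat in box 1 and Dog in box 3; box 2 has no score above 0.5 *)
```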

In[]:=

Options[netevaluate] = {TargetDevice -> "CPU", AcceptanceThreshold -> .5, MaxOverlapFraction -> .45};
(* Conform the image to 608x608, run the net, decode, filter classes, rescale boxes, then apply NMS *)
netevaluate[img_Image, category_ : All, opts : OptionsPattern[]] := Module[{net},
   net = NetModel["YOLO V3 Trained on Open Images Data"];
   nonMaxSuppression[OptionValue[MaxOverlapFraction]]@
    detectionsDeconformer[img, {608, 608}, "Fit"]@
     filterClasses[category]@
      netOutputDecoder[OptionValue[AcceptanceThreshold]]@
       (net[#, TargetDevice -> OptionValue[TargetDevice]] &)@
        imageConformer[{608, 608}, "Fit"]@img];
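Since netevaluate always conforms images to a square 608×608 input with "Fit", deconformRectangles reduces to one scale factor, max(width, height)/608, plus an offset of half the width-height difference along the shorter side. The hypothetical helper unfitBox below restates that arithmetic for a single box. For a 1216×608 image the scale is 2 and the vertical offset is 304, so the net-space box {100, 200}-{300, 400} maps to {200, 96}-{600, 496}.

```mathematica
(* Hypothetical helper: map one box from 608x608 "Fit" coordinates back to an image of size {w, h};
   same scale/padding arithmetic as deconformRectangles with netDims = {608, 608} *)
unfitBox[{w_, h_}, Rectangle[p1_, p2_]] := Module[{scale, padding},
  scale = Max[w, h]/608;
  (* "Fit" pads the shorter side symmetrically *)
  padding = If[h < w, {0, (w - h)/2}, {(h - w)/2, 0}];
  Rectangle[Round[p1*scale - padding], Round[p2*scale - padding]]]

unfitBox[{1216, 608}, Rectangle[{100, 200}, {300, 400}]]
(* Rectangle[{200, 96}, {600, 496}] *)
```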

Basic usage

Obtain the detected bounding boxes with their corresponding classes and confidences for a given image:

Inspect which classes are detected:
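netevaluate returns a list of {Rectangle, {{label, probability}, ...}} pairs. The sketch below uses a hand-made list of that shape (detectionDemo and its values are hypothetical, not model output) to show one way of listing the detected classes with how many boxes carry each.

```mathematica
(* Hypothetical detection list in the netevaluate output format *)
detectionDemo = {
   {Rectangle[{10, 20}, {200, 220}], {{"Cat", 0.91}}},
   {Rectangle[{250, 40}, {400, 300}], {{"Dog", 0.84}, {"Animal", 0.62}}},
   {Rectangle[{420, 60}, {600, 280}], {{"Dog", 0.77}}}};

(* Collect every label of every box and count the occurrences *)
Counts[Flatten[detectionDemo[[All, 2, All, 1]]]]
(* <|"Cat" -> 1, "Dog" -> 2, "Animal" -> 1|> *)
```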

Visualize the detection:
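One way to draw the boxes is HighlightImage, which accepts a list of Rectangle primitives in image coordinates. The sketch uses a blank image and hypothetical detections (imageDemo, detectionDemo) so it runs without the model; with real output, the first element of each netevaluate result plays the same role.

```mathematica
(* Hypothetical blank 640x480 image and detections (illustrative values only) *)
imageDemo = ConstantImage[White, {640, 480}];
detectionDemo = {
   {Rectangle[{10, 20}, {200, 220}], {{"Cat", 0.91}}},
   {Rectangle[{250, 40}, {400, 300}], {{"Dog", 0.84}}}};

(* Outline every detected box on the image *)
highlighted = HighlightImage[imageDemo, detectionDemo[[All, 1]]]
```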

Network result

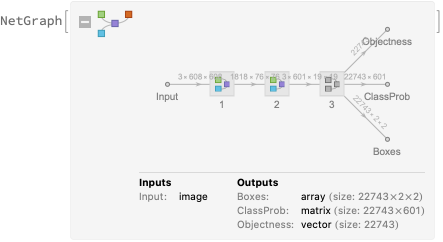

The network computes 22,743 bounding boxes, the probability that each box contains an object, and the conditional probability that the object belongs to each class:
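The box count is consistent with the usual YOLO v3 layout, which is an assumption here rather than something stated on this page: a 608×608 input is predicted on three grids with strides 32, 16 and 8, and every grid cell proposes 3 anchor boxes.

```mathematica
(* Assumption: 3 anchors per cell on grids with strides 32, 16 and 8 *)
inputSize = 608;
gridSizes = inputSize/{32, 16, 8}   (* {19, 38, 76} *)
3*Total[gridSizes^2]                (* 3 (361 + 1444 + 5776) = 22743 *)
```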

Visualize all the boxes predicted by the net scaled by their “objectness” measures:
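A simple reading of "scaled by objectness" is to draw each box with an edge opacity equal to its objectness, so unlikely boxes fade out. The sketch below does that for hypothetical boxes and scores (boxesDemo, objectnessDemo); with the real net, the "Boxes" and "Objectness" outputs would take their place.

```mathematica
(* Hypothetical boxes as {{x1, y1}, {x2, y2}} and their objectness values *)
boxesDemo = {{{10, 10}, {120, 90}}, {{60, 40}, {200, 160}}, {{150, 20}, {300, 140}}};
objectnessDemo = {0.95, 0.30, 0.05};

(* Edge opacity = objectness; faces are left empty *)
objectnessPlot = Graphics[MapThread[{EdgeForm[{Black, Opacity[#1]}], FaceForm[], Rectangle @@ #2} &,
   {objectnessDemo, boxesDemo}]]
```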

Visualize all the boxes scaled by the probability that they contain an animal:
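The probability that a box contains an animal is its objectness times the "Animal" column of the class probabilities, the same product netOutputDecoder uses for scoring. The sketch below picks that column by name from a hypothetical three-class label list and synthetic outputs (labelsDemo, objectnessDemo, classProbDemo are illustrative).

```mathematica
(* Hypothetical labels and network outputs for 3 boxes and 3 classes *)
labelsDemo = {"Cat", "Animal", "Car"};
objectnessDemo = {0.9, 0.5, 0.1};
classProbDemo = {{0.7, 0.9, 0.0}, {0.1, 0.2, 0.8}, {0.3, 0.6, 0.1}};

(* Locate the "Animal" column, then weight it by objectness *)
animalIndex = First@FirstPosition[labelsDemo, "Animal"];
animalProb = objectnessDemo*classProbDemo[[All, animalIndex]]
(* approximately {0.81, 0.1, 0.06} *)
```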

Superimpose the animal prediction on top of the scaled input received by the net:

Class filtering

Obtain a test image:

Obtain bounding boxes for the specified classes only (“Vehicle registration plate” and “Window”):
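With the code above, the classes are passed as the second argument, as in netevaluate[img, {"Vehicle registration plate", "Window"}]. Filtering happens before rescaling and suppression, so only the requested classes compete in NMS, and boxes left with no label are removed by the DeleteCases inside nonMaxSuppression. The sketch below restates the filtering step on hypothetical detections (filterDemo and detsDemo are illustrative names).

```mathematica
(* Same rule as filterClasses: keep only {label, prob} pairs whose label is requested *)
filterDemo[classes_][detections_] :=
  {#[[1]], Select[#[[2]], MemberQ[classes, First[#]] &]} & /@ detections;

(* Hypothetical detections (illustrative values only) *)
detsDemo = {
   {Rectangle[{0, 0}, {50, 20}], {{"Vehicle registration plate", 0.88}}},
   {Rectangle[{60, 0}, {160, 90}], {{"Window", 0.71}, {"Car", 0.93}}},
   {Rectangle[{200, 0}, {300, 80}], {{"Person", 0.8}}}};

(* The "Person" box ends up with no labels and is dropped *)
DeleteCases[filterDemo[{"Vehicle registration plate", "Window"}][detsDemo], {_, {}}]
```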

Visualize the detection:

Net information

Inspect the number of parameters of all arrays in the net:

Obtain the total number of parameters:

Obtain the layer type counts:

Display the summary graphic:
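The four steps above map onto NetInformation properties. The sketch below is one way to query them, assuming the model can be downloaded; in recent versions the same property names can also be passed to Information.

```mathematica
net = NetModel["YOLO V3 Trained on Open Images Data"];

(* Number of parameters in each array of the net *)
arrayCounts = NetInformation[net, "ArraysElementCounts"];
(* Total number of parameters *)
totalParams = NetInformation[net, "ArraysTotalElementCount"]
(* How many layers of each type the net contains *)
NetInformation[net, "LayerTypeCounts"]
(* Summary graphic of the network *)
NetInformation[net, "SummaryGraphic"]
```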

Export to MXNet

Get the size of the parameter file:

The size is similar to the byte count of the resource object:
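The export and the size comparison can be sketched as follows, assuming the "MXNet" exporter writes the weights next to the JSON graph file as net.params; the temporary-directory location is an arbitrary choice.

```mathematica
(* Export the net: net.json holds the graph, net.params the weights *)
jsonPath = Export[FileNameJoin[{$TemporaryDirectory, "net.json"}],
   NetModel["YOLO V3 Trained on Open Images Data"], "MXNet"];
paramPath = FileNameJoin[{DirectoryName[jsonPath], "net.params"}];

(* Size of the parameter file, in bytes *)
FileByteCount[paramPath]
(* Byte count of the resource object, for comparison *)
ResourceObject["YOLO V3 Trained on Open Images Data"]["ByteCount"]
```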